The only scalable and robust analog in-memory computing for breakthrough efficiency and programmability

In-Memory Computing (IMC)

In-memory computing performs computation inside the memory array where the data is stored, which improves compute efficiency and reduces data movement.

Robust Analog

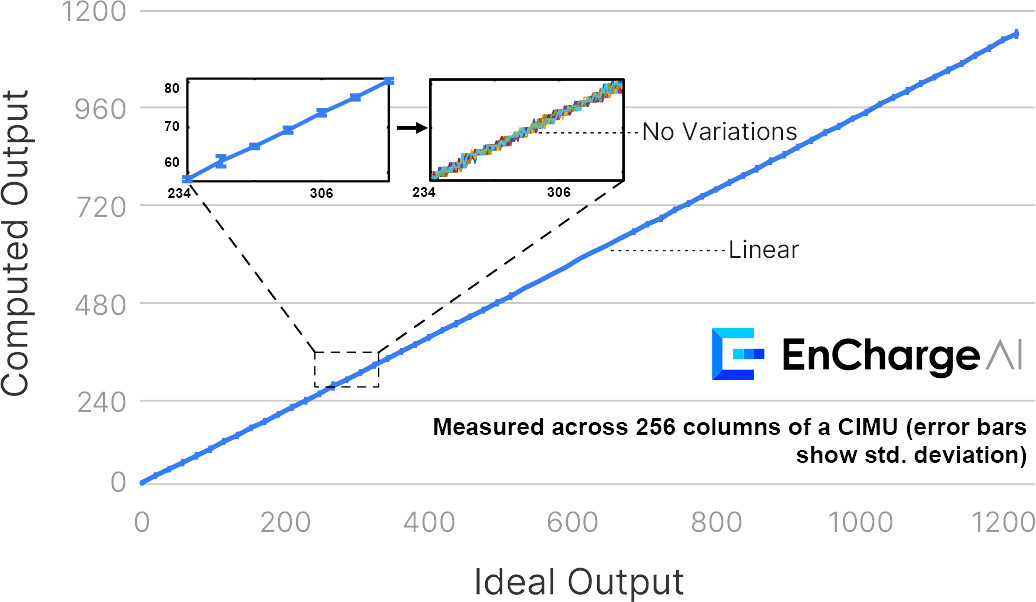

EnCharge AI’s innovation in charge-domain computation, based on intrinsically precise metal capacitors, breaks traditional IMC tradeoffs and overcomes the SNR limitations of analog processing.

Figure panels: Current-based Analog IMC; Charge-based Analog IMC (measured silicon)
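To make the idea of charge-domain computation concrete, here is a minimal sketch of an idealized charge-sharing dot product. It is a textbook charge-redistribution model, not EnCharge AI's circuit: the function names, the binary AND bit cell, the Gaussian mismatch model, and the mismatch value of 0.1% are all illustrative assumptions, not measured data.

```python
import random

def charge_share_dot(x_bits, w_bits, caps, v_ref=1.0):
    """Idealized charge-domain dot product of two binary vectors.

    Assumption: each hypothetical bit cell charges its capacitor to v_ref
    when (x AND w) is 1 and leaves it at 0 V otherwise. Shorting all
    capacitors together redistributes the charge, so the shared voltage is
    sum(C_i * V_i) / sum(C_i).
    """
    total_charge = sum(c * v_ref * (x & w) for x, w, c in zip(x_bits, w_bits, caps))
    return total_charge / sum(caps)

def mismatched_caps(n, sigma, rng):
    """Unit capacitors with Gaussian relative mismatch sigma (assumed model)."""
    return [1.0 + rng.gauss(0.0, sigma) for _ in range(n)]

rng = random.Random(0)
n = 256
x = [rng.randint(0, 1) for _ in range(n)]
w = [rng.randint(0, 1) for _ in range(n)]

# Reference result: the normalized digital dot product.
exact = sum(a & b for a, b in zip(x, w)) / n

# Perfectly matched capacitors reproduce the digital result.
ideal = charge_share_dot(x, w, [1.0] * n)

# Small capacitor mismatch (0.1%, an illustrative value) perturbs it slightly.
noisy = charge_share_dot(x, w, mismatched_caps(n, 0.001, rng))

print(f"exact={exact:.6f} ideal={ideal:.6f} noisy={noisy:.6f}")
```

In this model the output depends only on capacitance ratios, so the accuracy of the analog sum is set by how well the capacitors match one another.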

Scalable

Technology scalability and maturity proven through five generations of designs, across multiple process nodes and scaled-up architectures.

Breakthrough Energy Efficiency

The highest demonstrated efficiency among both incumbents and new entrants for AI compute.

Fully Programmable and Scalable Execution

Seamless integration in user workflows and system deployments, with high-performance support across AI model types and operators.
